+++ Description = “Using clustering on character sequences” date = “2021-04-29” draft = false menu = “main” title = “Sequence clustering” toc = true type = “page” +++

Epistemic status: Random thoughts with little in the way of empirical evidence, yet.

Problem

We have have sequence of tokens. We wish to know the probability distribution of the next token given the preceding tokens.

A lot of people have attempted to do this.

For the sake of simplicity, let us assume that our tokens are characters of text or to be more precise, bytes, one of 256 distinct symbols.

A bytes can be stored in 8 bits. However, it is not a bit string of length 8. No characters are are of a lesser hamming distance to others.

Character embedding

We wish to embed the 256 characters in a mode2 space such that we can easily separate them into different groups via a simple diagonal cut.

Consider if instead we take the bits of the 256 8 bit integers as a 256x8 bit grid. We transpose this into an 8x256 bit grid, or 8, 256 bit strings.

The 0th bit string is of the form

0101010101010101...

The 1th, of the form

0011001100110011...

the 2th of the form,

0000111100001111...

Then, for each of the 256 8 bit integers, we map the 8 bits of it over the 8 bit strings, bit flipping the 256 bit string accordingly, and then average the 8 bit strings together to produce a single 256 bit string.

Now we have 256, 256 bit strings.

This is equivalent to the following function:

fn(x: u8, y: u8) -> bool {

(x^y).count_ones() > 4

}

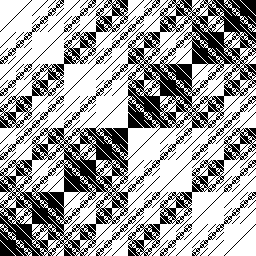

If we map this over a 256x256 pixel image, we produce the following image.

This is a form of munching squares.

We can now map a single byte of 8 bits into a 256 bit string. To do so, we use the bytes to index into our 256x256 bit matrix and select a row of bits. To decode, we take our 8x256 bit matrix mentioned earlier, popcount each row with our 256 bit encoded form and threshold at 128 to obtain the 8 bits of our byte.

This is an extremely wasteful, but quite robust, error correcting code.

Now our 256 bytes are embed into corners of a 256 dimensional mod2 cube.

We can select corners of this cube and separate by distance, that is, make diagonal cuts through this cube, to separate bytes by whatever attribute we wish. The cost is exponential, but it a powerful embedding.

n-gram model

Let us consider a very simple model. Given n bytes, predict the nth byte. For example, if n is 2, we use bytes 0 and 1 to predict byte 2.

This is a very primitive model and will not produce very good results, but it is simple.

If we expand our bytes into 256 bits as discussed previously, for n=2, we have 512 bits of input and wish to obtain likelihoods for each of the 256 possible target bytes. To look at it differently, we have a 512 dimensional mod2 cube. We wish to divide it into 256 partitions such that the correct target bytes in selected as often as possible.

Averaging

If we split out training set by target bytes, we have now have 256 classes, for each we count the number with each of the 512 input bits set, obtaining a (usize, [u32; 256]). We can then threshold and bitpack this to obtain the corners on the 512 mod2 input cube closest to that class.

Now we have 256, 512 bit strings.

We can compute hamming distance with our input and pick the closest.

Question: How big should the counters be?

Clustering

Note: In this post I will refer to Lloyd’s Algorithm and k-means clustering interchangeable. They are not the same, but are similar, and in mod2 space I do not understand the difference.

Consider a set of corners on our 512 dimensional mod2 cube. They are not randomly distributed. They cluster. We do need not need 512 bits to well approximate the inputs.

Starting with k random 512 bit strings, a few iterations of Lloyd’s algorithm will well center them on the clusters of the data set.

Now our dataset

Per class clustering

Consider, when averaging each class, we obtained a single corner for each class. In many cases, this is well and good, however it cannot learn XOR. Some target bytes want multiple disparate corners of the input space.

We can combine per class averaging with clustering to produce a small set of corners for each class. Now we have c*k features.

Are these good features?

Layers

n-gram models have limited context windows. We can increase n but this is expensive and will loose generalization.

n-gram feature generation allows us to see back n steps into the past. What if we stack l layers? Now we can see n*l steps. This is an improvement, however the context span is linear with depth. If we use strided convolution, we can achieve context exponential with depth.

We might, for example, use an n of 2 and stride of 2. This would achieve exactly once usage of each input. Or, we could use an n of 3 and a stride of 2. This would tend to preference more recent information while still permitting older information to be used, which we want. One could imagine all manner of sachems to incurring more recent information to be used, while preserving exponential span.

A

| n | counter | shape | time |

|---|---|---|---|

| 256 | u8 | [[(); 8]; 32] | 70.306439ms |

| 256 | u16 | [[(); 8]; 32] | 54.794909ms |

| 256 | u32 | [[(); 8]; 32] | 74.536098ms |

| 256 | u64 | [[(); 8]; 32] | 70.471861ms |

| 256 | u8 | [[(); 16]; 16] | 136.807488ms |

| 256 | u16 | [[(); 16]; 16] | 59.667724ms |

| 256 | u32 | [[(); 16]; 16] | 61.264961ms |

| 256 | u64 | [[(); 16]; 16] | 62.063218ms |

| 256 | u8 | [[(); 32]; 8] | 130.348585ms |

| 256 | u16 | [[(); 32]; 8] | 61.889853ms |

| 256 | u32 | [[(); 32]; 8] | 56.826211ms |

| 256 | u64 | [[(); 32]; 8] | 60.127326ms |

| 256 | u8 | [[(); 64]; 4] | 68.602001ms |

| 256 | u16 | [[(); 64]; 4] | 83.279447ms |

| 256 | u32 | [[(); 64]; 4] | 74.519827ms |

| 256 | u64 | [[(); 64]; 4] | 91.757619ms |

| 256 | u8 | [[(); 128]; 2] | 170.759958ms |

| 256 | u16 | [[(); 128]; 2] | 152.370505ms |

| 256 | u32 | [[(); 128]; 2] | 146.694984ms |

| 256 | u64 | [[(); 128]; 2] | 318.387502ms |

B

| n | counter | shape | time |

|---|---|---|---|

| 256 | u8 | [[(); 8]; 32] | 70.177225ms |

| 256 | u16 | [[(); 8]; 32] | 55.305374ms |

| 256 | u32 | [[(); 8]; 32] | 74.098073ms |

| 256 | u64 | [[(); 8]; 32] | 72.278759ms |

| 256 | u8 | [[(); 16]; 16] | 133.025975ms |

| 256 | u16 | [[(); 16]; 16] | 59.137326ms |

| 256 | u32 | [[(); 16]; 16] | 60.725917ms |

| 256 | u64 | [[(); 16]; 16] | 61.484059ms |

| 256 | u8 | [[(); 32]; 8] | 18.871828ms |

| 256 | u16 | [[(); 32]; 8] | 20.718032ms |

| 256 | u32 | [[(); 32]; 8] | 24.71875ms |

| 256 | u64 | [[(); 32]; 8] | 62.126866ms |

| 256 | u8 | [[(); 64]; 4] | 56.91779ms |

| 256 | u16 | [[(); 64]; 4] | 57.11475ms |

| 256 | u32 | [[(); 64]; 4] | 60.558352ms |

| 256 | u64 | [[(); 64]; 4] | 119.201621ms |

| 256 | u8 | [[(); 128]; 2] | 114.984446ms |

| 256 | u16 | [[(); 128]; 2] | 102.325288ms |

| 256 | u32 | [[(); 128]; 2] | 441.049716ms |

| 256 | u64 | [[(); 128]; 2] | 437.152562ms |